Towards Exascale in Engineering

Niclas Jansson & Jonathan Vincent, PDC, and Timofey Mukha, Adam Peplinski, Saleh Rezaeiravesh & Philipp Schlatter, KTH Engineering Mechanics

The European Centre of Excellence (CoE) for Engineering Applications, EXCELLERAT, is one of the European CoEs aiming at supporting key engineering industries in Europe and promoting interaction between academic research and code development with industrial workflows. EXCELLERAT thus focuses on complex computational fluid dynamics (CFD) simulations using high-performance computing (HPC) technologies and answers the engineering community’s needs by providing expertise in various aspects of numerical modelling from application development to performing simulations and data analysis. It also provides a bridge between industrial partners, computer centres and the academic community.

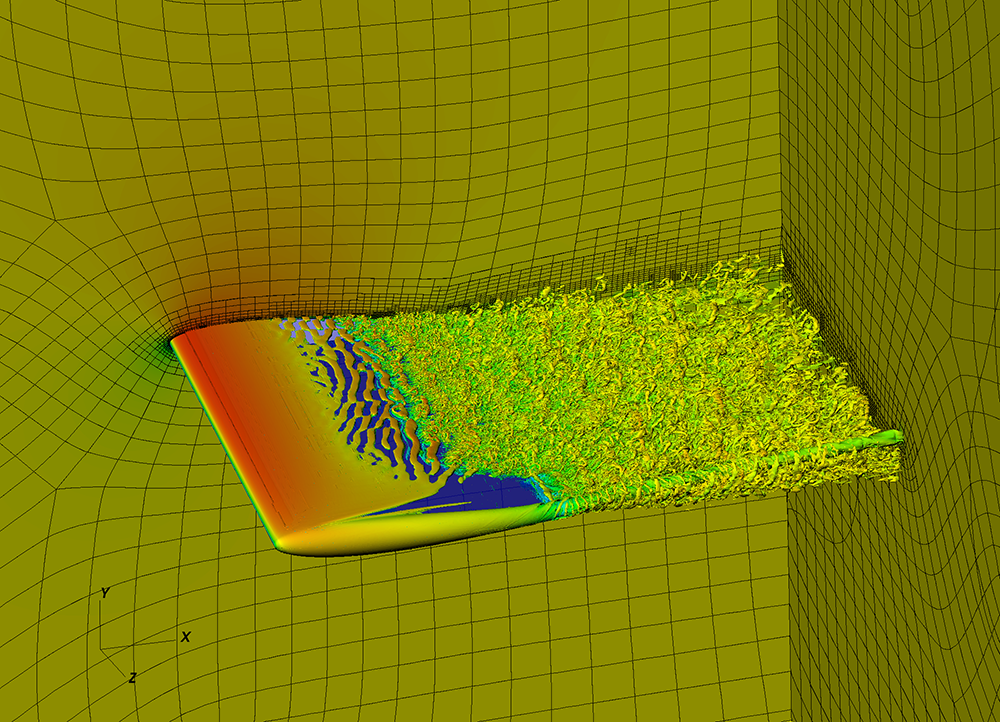

In the field of turbulence simulations, NEK5000 is one of the six reference codes selected for EXCELLERAT. It is a high-fidelity CFD solver based on a high-order Spectral Element Method (SEM) used for modelling a number of different flow cases, including industrially relevant applications, such as nuclear reactor thermal-hydraulics. An important method improvement in the code was the implementation of an Adaptive Mesh Refinement (AMR) algorithm that makes it possible to control the computational error during a simulation and reduce it at minimal cost by proper mesh adjustment. One of the set goals of EXCELLERAT was to extend this AMR method in NEK5000 to fully three-dimensional flows making it a robust solver capable of adaptively solving complex industrially relevant problems. A number of different aspects of numerical modelling were considered here starting from mesh generation and solver parallel performance, through solution reliability (Uncertainty Quantification) and ending with in-situ data analysis and visualization. After three years of work, EXCELLERAT can present its developments in the form of mature tools, and the results of the flow simulations performed with relatively complex and industrially relevant cases, such as the simulation of a flow around a three-dimensional wing tip. Although this flow case is relevant for a number of industrial areas, like aeronautics, automotive or bio-engineering, there is an apparent lack of high-fidelity numerical data for high-Reynolds-number turbulent flows. The second main case we considered was performed in cooperation with CINECA and involved the simulation of rotating parts, in particular, a drone rotor with an Eppler E64 wing profile.

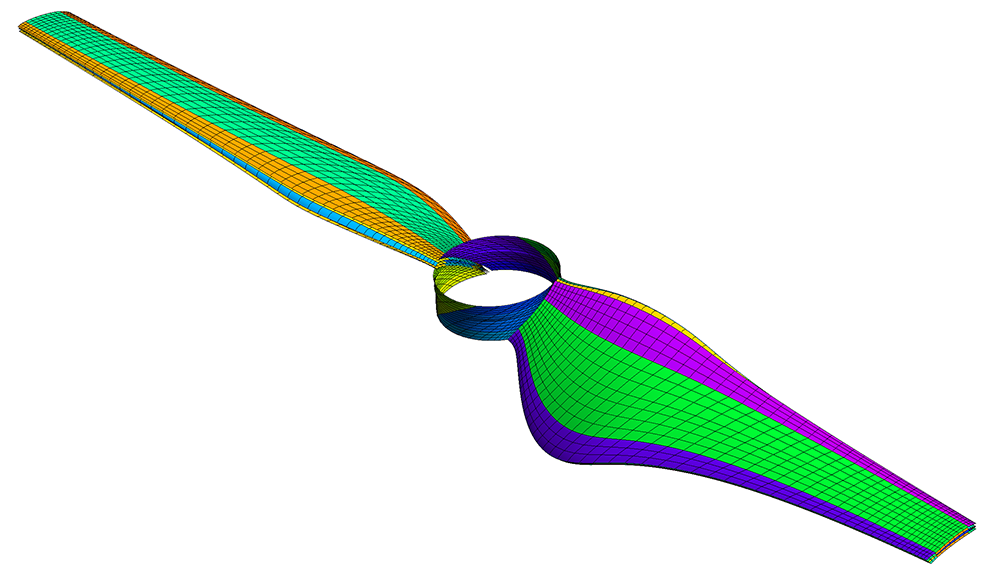

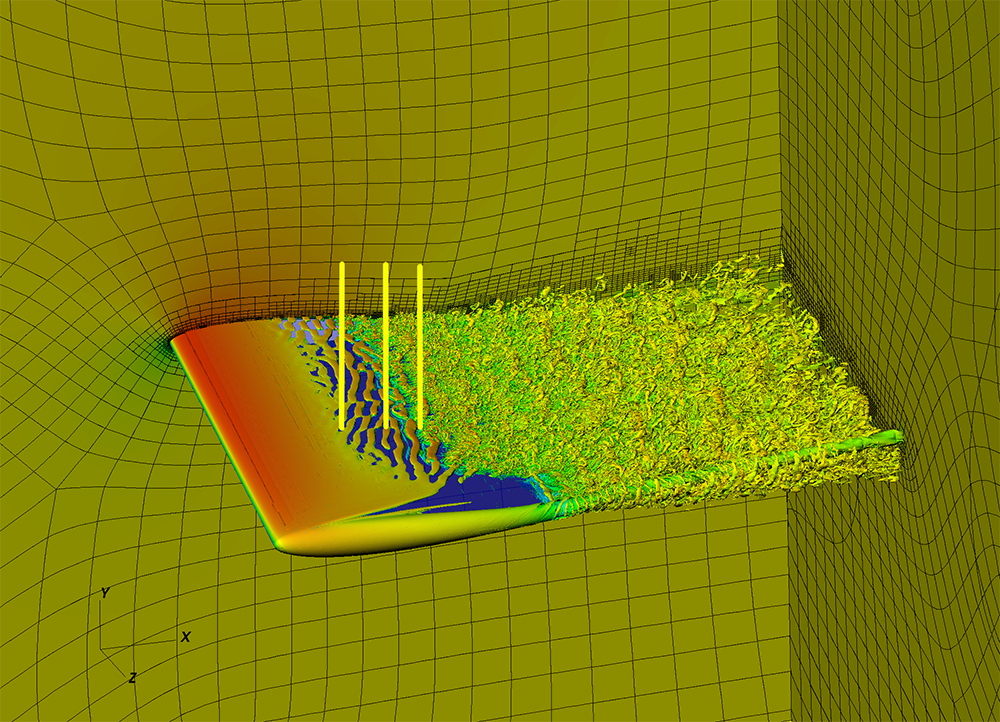

We started by creating a workflow for the pre-processing step, including hex-based mesh generation with the widely used gmsh library, and then developed proper tools for the simulation initialization. The workflow was used to generate suitable NEK5000 meshes for both the NACA0012 aerofoil with a rounded wing tip and the drone rotor. There were multiple issues we had to deal with, such as the proper definition of relative element orientations and the proper projection of the grid points located at the domain boundary onto the prescribed surface. However, the most challenging and time-consuming step was the generation of a hex-based mesh. On the one hand, our AMR capability simplifies the whole meshing process by providing the flexibility of non-conforming meshes; on the other hand, it makes meshing more demanding, as efficient AMR requires a relatively coarse initial mesh that still properly represents the domain geometry. The resulting meshes are shown in the images below. The upper image shows the mesh on the rotor blade, while the lower image presents a vortical structure of the transient flow around the NACA0012 aerofoil with a rounded tip for Re=100,000. Black lines mark the elements’ boundaries; each element consists of (N+1)×(N+1)×(N+1) grid points, where N is the chosen polynomial order. In the lower image, the regions with increased resolution, identified by our AMR procedure, are clearly visible.

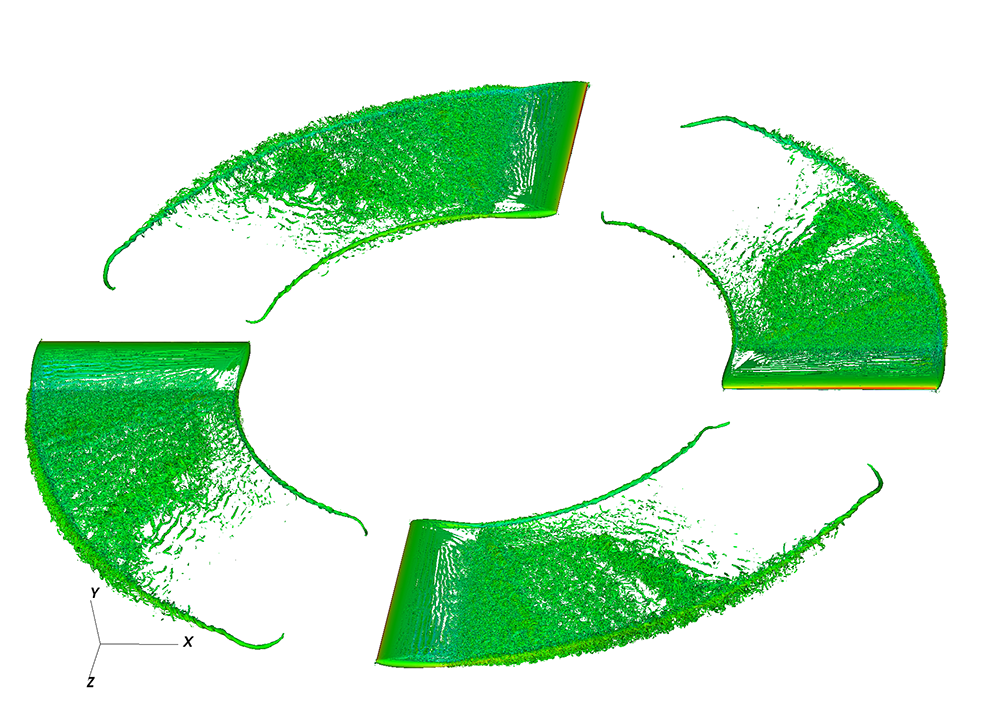

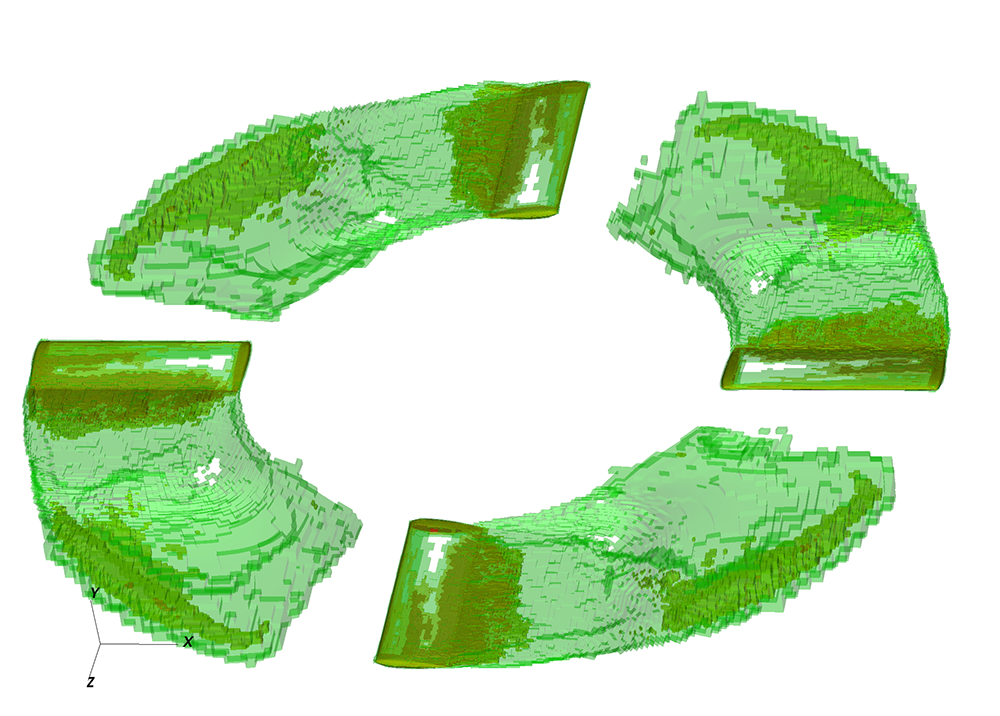

We also worked on assessing the parallel performance of our AMR implementation in NEK5000 by looking into, for example, different mesh partitioners (ParMetis and ParRSB), and communication patterns in the pressure preconditioner. All the modifications that have been described enabled us to perform fully adaptive AMR simulations for the two use cases. The figures below show the results of a preliminary “toy” rotor simulation, at a Reynolds number Re=100,000 and with a rotor built out of four blades with NACA0012 aerofoils and rounded wing tips. These figures present the vortical structures of the transient flow and domain sections occupied by different refinement levels.

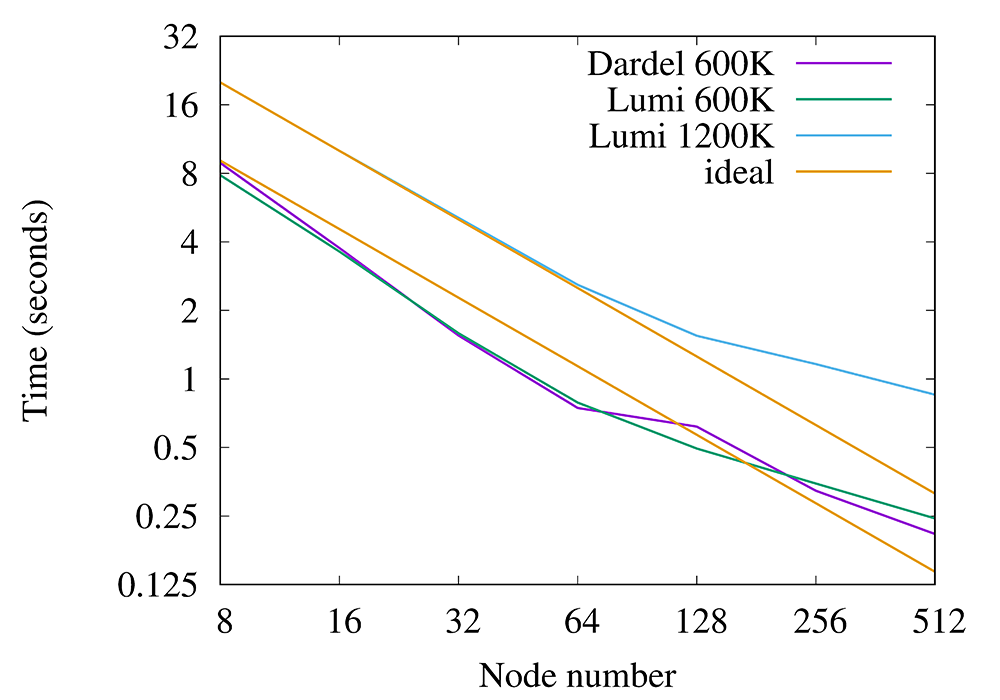

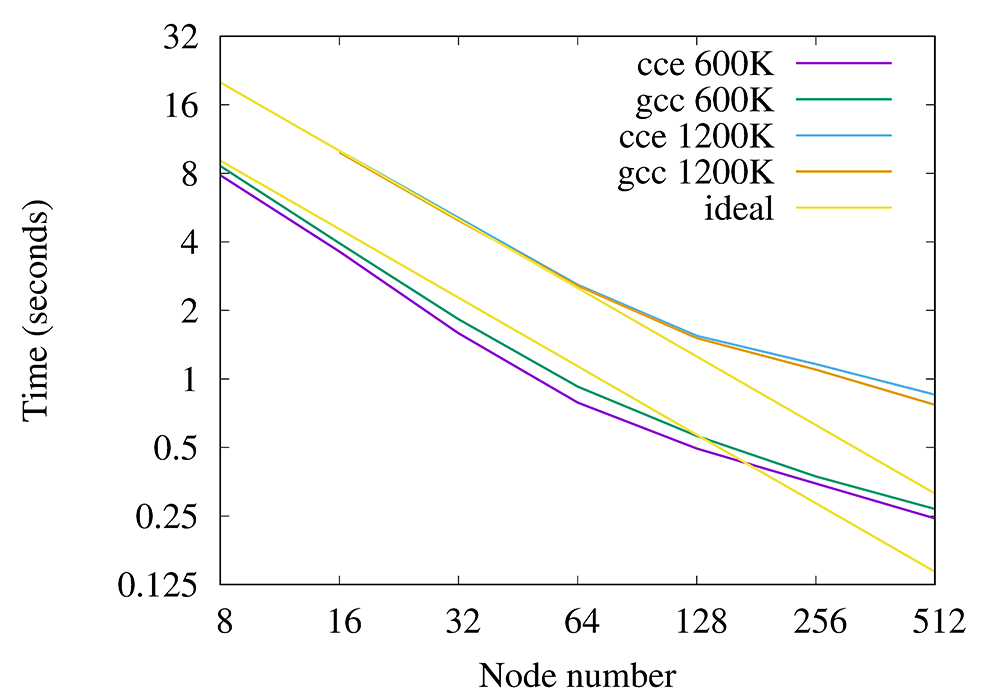

The toy rotor case was tested on the CPU partitions of the new supercomputers Dardel (at PDC) and LUMI (at CSC in Finland); we had access to both machines during their respective pilot phases. Two different setups were used: the smaller one with 631,712 elements and the larger with 1,262,258 elements, both with polynomial order 7. The data for strong scaling is presented in the two graphs below showing that our AMR branch of NEK5000 preserves the good scaling properties of its conformal version. On Dardel we achieved parallel efficiency of 0.7 on 512 nodes (out of 554 available nodes) with only 10 elements (5,120 grid points) per MPI rank. The upper graph shows results for both Dardel and LUMI obtained using the native Cray compiler, while the lower graph presents LUMI results obtained with Cray and GNU compilers. Both plots show the average execution time per time step and an ideal scaling curve.
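The per-rank figures quoted above can be checked with a few lines of Python. This is only an illustrative sketch: the function names are ours, the value of 128 MPI ranks per node is an assumption that is not stated in the text, and the timings passed to `parallel_efficiency` are made-up placeholders rather than measured data.

```python
def grid_points_per_element(poly_order: int) -> int:
    """Grid points in one hexahedral spectral element: (N+1)^3."""
    return (poly_order + 1) ** 3


def parallel_efficiency(t_ref: float, nodes_ref: int, t: float, nodes: int) -> float:
    """Strong-scaling efficiency of a run relative to a reference run.

    The ideal time on `nodes` is t_ref * nodes_ref / nodes; the efficiency
    is the ratio of that ideal time to the measured time `t`.
    """
    return (t_ref * nodes_ref) / (t * nodes)


# Polynomial order 7 gives 8^3 = 512 grid points per element,
# so 10 elements per rank correspond to 5,120 grid points per rank.
points_per_rank = 10 * grid_points_per_element(7)

# Elements per rank for the smaller setup on 512 nodes.
# ASSUMPTION: 128 MPI ranks per node (not stated in the article).
ranks_per_node = 128
elements_per_rank = 631_712 / (512 * ranks_per_node)

# PLACEHOLDER timings (seconds per time step), not measured values.
example_efficiency = parallel_efficiency(t_ref=2.0, nodes_ref=8, t=0.05, nodes=512)

print(points_per_rank, round(elements_per_rank, 2), example_efficiency)
```

Under the assumed rank count, the smaller setup gives just under 10 elements per rank on 512 nodes, which is consistent with the figure quoted above.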

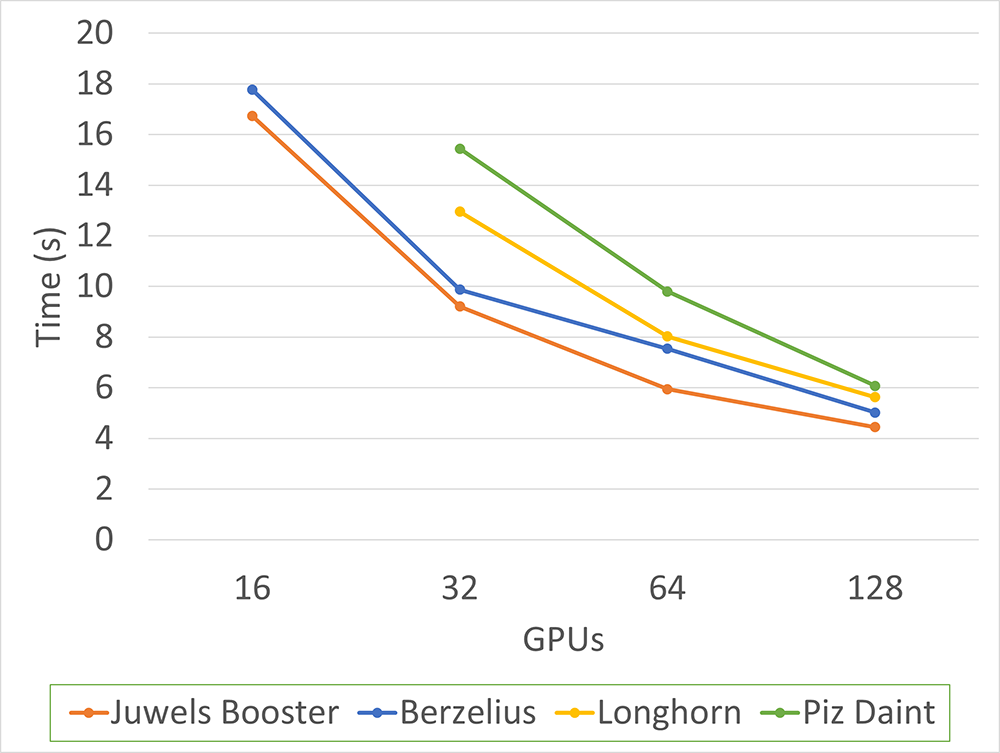

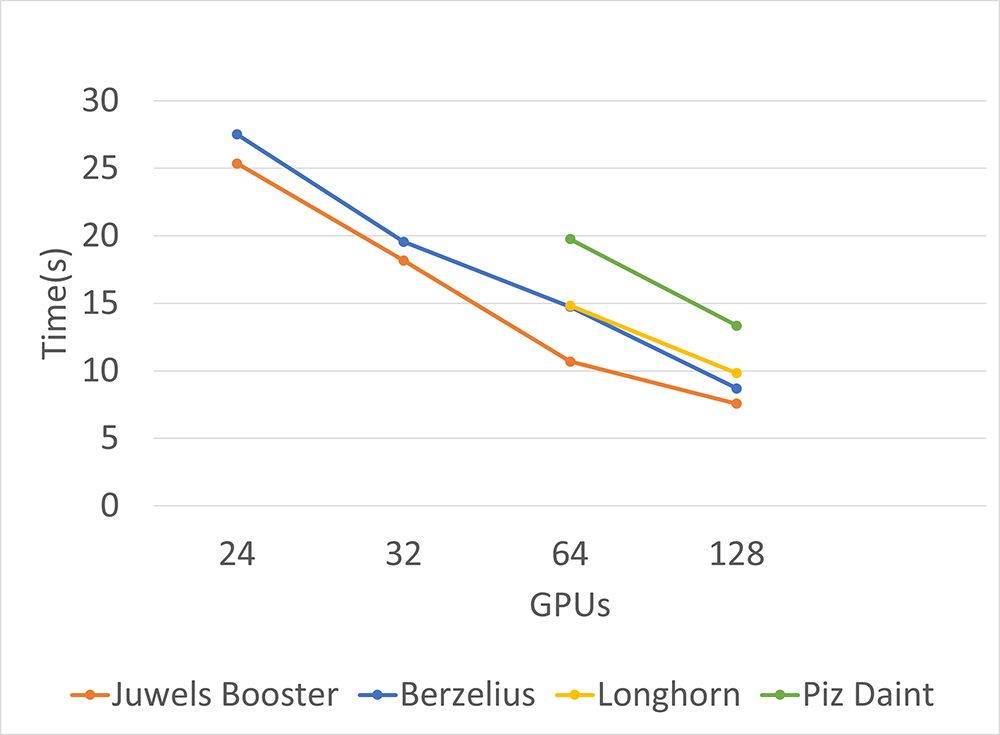

Another important task for EXCELLERAT has been to improve NEK5000’s exascale readiness, particularly with respect to accelerators. As a starting point, we used an OpenACC version of the proxy-app Nekbone, and used the experience gained from that to create an OpenACC + CUDA implementation of NEK5000. The graphs below show the results of the OpenACC NEK5000 implementation on several different GPU-based systems.

As further work, we are looking at optimizing the kernels and moving more work to the GPUs to further improve performance. As part of the work to get NEK5000 running on the GPU partition of Dardel, we are also creating a version of NEK5000 that uses OpenMP for GPU offloading. This matters because OpenMP offloading is better suited to the AMD GPUs that will be installed on the Dardel system.

Even on exascale machines and with the algorithmic improvements in efficiency described above, simulations of wall-bounded flows at very high Reynolds numbers will remain prohibitively expensive if the whole boundary layer has to be resolved in space and time. To circumvent this, we have started to implement modern wall modelling in NEK5000. Wall models act as a substitute for resolving the smallest turbulent scales near the wall, thereby dramatically reducing the scaling of the grid size with the Reynolds number. Currently, we are testing our implementation on canonical wall-bounded flows, with the aim of applying wall models to the flow cases described earlier.
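To illustrate the idea, the sketch below shows one of the simplest algebraic wall models: given the velocity sampled at some distance from the wall, the logarithmic law of the wall is solved for the friction velocity, which in turn gives the wall shear stress. This is a minimal sketch under stated assumptions, not the model implemented in NEK5000 (the text does not specify which one is used). The constants κ = 0.41 and B = 5.2, the function names and the sample values are all our own choices for illustration.

```python
import math

KAPPA = 0.41   # von Karman constant (assumed value)
B_CONST = 5.2  # log-law intercept (assumed value)


def log_law_velocity(u_tau: float, y: float, nu: float) -> float:
    """Velocity predicted by the log law at wall distance y."""
    return u_tau * (math.log(y * u_tau / nu) / KAPPA + B_CONST)


def friction_velocity(u: float, y: float, nu: float,
                      tol: float = 1e-12, max_iter: int = 50) -> float:
    """Solve log_law_velocity(u_tau, y, nu) = u for u_tau by Newton iteration.

    u  : wall-parallel velocity sampled at distance y from the wall
    nu : kinematic viscosity
    Only meaningful if the sampling point lies in the log layer
    (roughly y+ > 30).
    """
    u_tau = math.sqrt(nu * u / y)  # laminar estimate as initial guess
    for _ in range(max_iter):
        residual = log_law_velocity(u_tau, y, nu) - u
        # Derivative of the log-law velocity with respect to u_tau.
        slope = math.log(y * u_tau / nu) / KAPPA + B_CONST + 1.0 / KAPPA
        step = residual / slope
        u_tau -= step
        if abs(step) < tol * u_tau:
            return u_tau
    raise RuntimeError("Newton iteration did not converge")


# Hypothetical sample point: u = 1 at y = 0.01 with nu = 1e-5.
u_tau = friction_velocity(u=1.0, y=0.01, nu=1.0e-5)
tau_w_over_rho = u_tau ** 2       # kinematic wall shear stress
y_plus = 0.01 * u_tau / 1.0e-5    # sampling height in wall units
print(u_tau, tau_w_over_rho, y_plus)
```

In a wall-modelled simulation the resulting shear stress would be imposed as a boundary condition, so the first grid point can sit in the log layer instead of the viscous sublayer.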

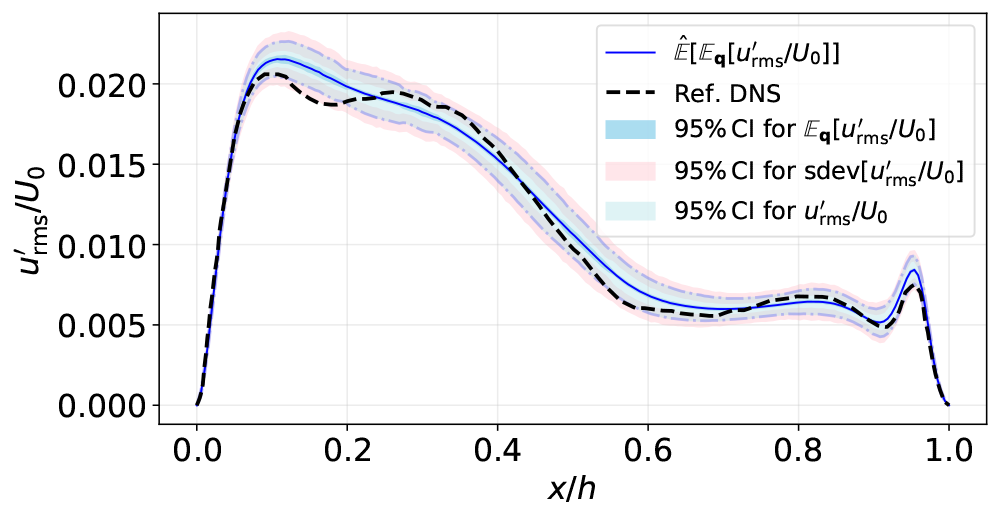

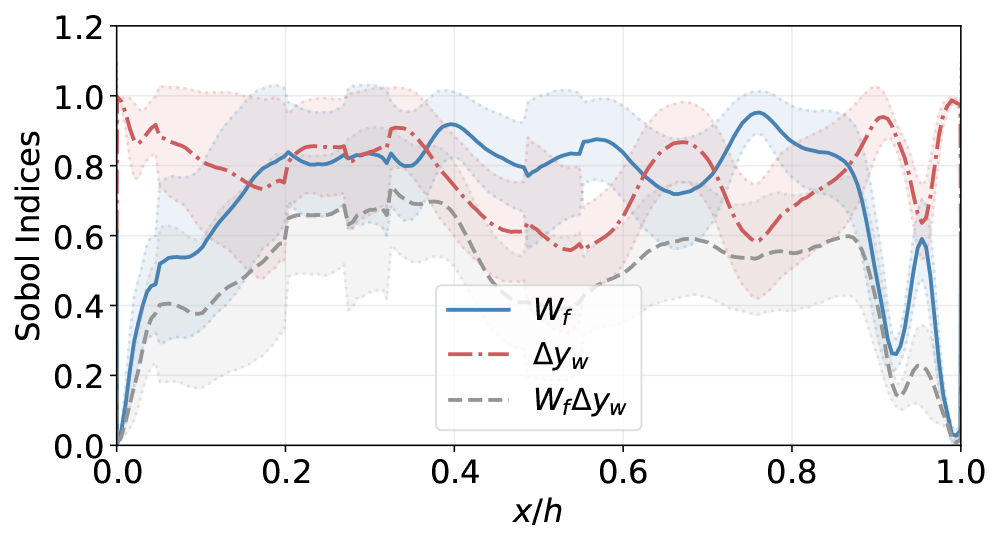

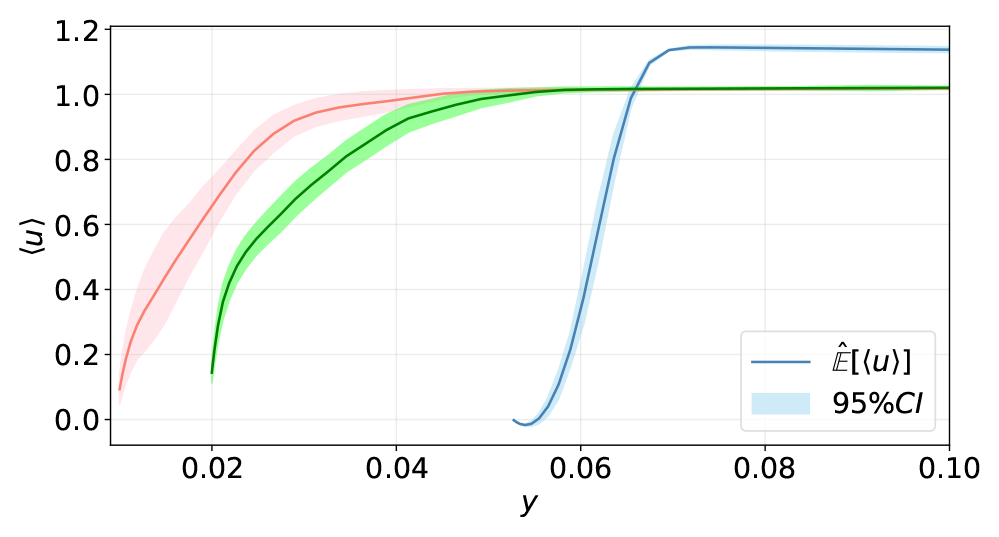

It is both important and interesting from the engineering design point of view to assess the sensitivity and robustness of scale-resolving simulations of fluid flows with respect to the variation of numerical, modelling, and physical parameters. There are also run-time factors that influence the reliability of the simulation results. An example is the uncertainty induced in the time-averaged quantities of turbulence simulations by the finite number of samples included in the averaging (see, for example, the figure below). Since industrially relevant flows are computationally expensive and cannot be run for very long time intervals, an estimate of the confidence in the time averages is needed to decide when a given simulation has reached a specified confidence level and may be stopped. Another aspect, which makes the formal assessment of uncertainties and sensitivities in CFD even more important, is the emergence of data-driven methods such as Bayesian optimization, where the algorithm is driven by the uncertainties. The progress in HPC makes it possible to apply such data-driven methods to large-scale flow simulations.

To achieve these goals of assessing uncertainty and robustness, relevant techniques in the fields of uncertainty quantification (UQ) and computer experiments can be employed. Implementing such techniques for CFD applications in general, and for the simulation of turbulent flows in particular, has led to the development of UQit, a Python package for uncertainty quantification. UQit has been developed and released as open source under the aegis of EXCELLERAT so that the wider research community can take advantage of it. Generally speaking, UQit can be non-intrusively linked to any CFD solver through appropriate interfaces for data transfer. The main features that are currently available in UQit are as follows: standard and probabilistic polynomial chaos expansion (PCE) with the possibility of using compressed sensing, analysis-of-variance-based (ANOVA-based) global sensitivity analysis, Gaussian process regression with observation-dependent noise structure, and batch-based and autoregressive models for the analysis of time-series. The flexible structure of UQit allows for combining different UQ methods and easily implementing new techniques, and we plan to keep updating the package.
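To give a flavour of what a non-intrusive polynomial chaos expansion does, the sketch below builds a one-dimensional Legendre PCE for a single uniformly distributed parameter, using only the Python standard library. It does not use the UQit API. The function names and the toy response are hypothetical, and a hard-coded three-point Gauss-Legendre rule is assumed, which limits the sketch to smooth, low-order responses. The "solver" is treated as a black box that is only evaluated at the quadrature nodes.

```python
import math

# Three-point Gauss-Legendre rule on [-1, 1] (exact up to polynomial degree 5).
GAUSS_NODES = (-math.sqrt(3.0 / 5.0), 0.0, math.sqrt(3.0 / 5.0))
GAUSS_WEIGHTS = (5.0 / 9.0, 8.0 / 9.0, 5.0 / 9.0)


def legendre(k: int, x: float) -> float:
    """Legendre polynomial P_k(x) via the three-term recurrence."""
    if k == 0:
        return 1.0
    p_prev, p = 1.0, x
    for n in range(1, k):
        p_prev, p = p, ((2 * n + 1) * x * p - n * p_prev) / (n + 1)
    return p


def pce_coefficients(model, degree: int = 2):
    """Project model(x), x ~ U(-1, 1), onto P_0..P_degree by quadrature."""
    coeffs = []
    for k in range(degree + 1):
        integral = sum(w * model(x) * legendre(k, x)
                       for x, w in zip(GAUSS_NODES, GAUSS_WEIGHTS))
        # For the uniform density 1/2 on [-1, 1], E[P_k^2] = 1 / (2k + 1).
        coeffs.append((2 * k + 1) / 2.0 * integral)
    return coeffs


def pce_mean_and_variance(coeffs):
    """Mean and variance of the response follow directly from the coefficients."""
    mean = coeffs[0]
    variance = sum(c * c / (2 * k + 1) for k, c in enumerate(coeffs) if k > 0)
    return mean, variance


def toy_response(x: float) -> float:
    """Hypothetical stand-in for a quantity of interest returned by a solver."""
    return x * x + x


coeffs = pce_coefficients(toy_response)
mean, variance = pce_mean_and_variance(coeffs)
print(coeffs, mean, variance)
```

For this quadratic toy response the expansion is exact, so the mean (1/3) and variance (19/45) can be checked by hand. With a real solver, each quadrature node corresponds to one simulation.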

To demonstrate the importance of UQ in assessing accuracy, robustness and sensitivity of the flow’s quantities of interest (QoIs) when the design parameters are allowed to vary, we have used UQit in different computer experiments using NEK5000. A novel aspect of the analysis is that we have been able to combine the uncertainty in the training data, for instance due to finite time-averaging, with the variation of numerical and modelling parameters. The following figure, for example, shows the combined propagation of uncertainty into a turbulent lid-driven cavity flow simulated by NEK5000. The analyses have been performed using probabilistic polynomial chaos expansion and Sobol sensitivity indices. The developed framework has also been applied to compare the performance of two widely used open-source CFD solvers, NEK5000 and OpenFOAM, through the evaluation of different UQ-based metrics.
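First-order Sobol indices measure the fraction of the output variance that is explained by each input parameter on its own. The sketch below estimates them by plain Monte Carlo sampling with Jansen's estimator, which is a swapped-in technique chosen for brevity. It is not the PCE-based workflow described above and does not use UQit. The function names and the linear toy model are hypothetical, and the inputs are assumed to be independent and uniform on [0, 1].

```python
import random


def first_order_sobol(model, n_params: int, n_samples: int = 20000, seed: int = 0):
    """Estimate first-order Sobol indices with Jansen's estimator.

    Two independent sample matrices A and B are drawn. For parameter i, the
    matrix A_B^(i) equals A except that column i is taken from B. Then
        V_i = Var(Y) - (1 / 2N) * sum_j (f(B)_j - f(A_B^(i))_j)^2
    and S_i = V_i / Var(Y).
    """
    rng = random.Random(seed)
    A = [[rng.random() for _ in range(n_params)] for _ in range(n_samples)]
    B = [[rng.random() for _ in range(n_params)] for _ in range(n_samples)]
    fA = [model(row) for row in A]
    fB = [model(row) for row in B]

    # Total variance estimated from the pooled outputs of A and B.
    pooled = fA + fB
    mean = sum(pooled) / len(pooled)
    total_var = sum((y - mean) ** 2 for y in pooled) / len(pooled)

    indices = []
    for i in range(n_params):
        sum_sq = 0.0
        for a_row, b_row, y_b in zip(A, B, fB):
            mixed = list(a_row)
            mixed[i] = b_row[i]  # only parameter i is shared with B
            sum_sq += (y_b - model(mixed)) ** 2
        v_i = total_var - sum_sq / (2 * n_samples)
        indices.append(v_i / total_var)
    return indices


def toy_model(x):
    """Hypothetical QoI: linear in two parameters, so S = (0.8, 0.2) exactly."""
    return 2.0 * x[0] + 1.0 * x[1]


sobol = first_order_sobol(toy_model, n_params=2)
print(sobol)
```

For the additive toy model the exact indices are 4/5 and 1/5, so the Monte Carlo estimates can be compared against a known answer. For an expensive CFD solver, the number of model evaluations needed here is the reason surrogate-based approaches such as PCE are attractive.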

UQit has also been employed in collaboration with an EXCELLERAT partner to develop the workflow required for conducting online/in-situ UQ analyses relying on CFD simulations on HPC systems. The objectives can be diverse, including UQ and sensitivity analyses, constructing predictive surrogates and reduced-order models, developing data-driven models, and performing robust optimization. Currently, the collaboration has led to the successful development and implementation of novel algorithms for in-situ estimation of time-average uncertainties. Due to these streaming estimators, storing time-series data of flow simulations can be avoided.
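The idea of a streaming estimator can be sketched as follows: samples are consumed one at a time as the simulation produces them, and only a handful of running sums are kept in memory. The example combines non-overlapping batch means with Welford's online update to estimate the time average and its standard error. This is a generic textbook construction shown under our own assumptions, not the novel algorithms developed in the collaboration. The class name and batch size are hypothetical, and the estimate is only reliable if each batch is long compared with the correlation time of the signal.

```python
import math


class StreamingBatchMeans:
    """Running time average and its standard error, without storing samples."""

    def __init__(self, batch_size: int):
        self.batch_size = batch_size
        self._batch_sum = 0.0   # sum of samples in the current, incomplete batch
        self._in_batch = 0      # number of samples in the current batch
        self.n_batches = 0      # number of completed batches
        self._mean = 0.0        # running mean of the batch means
        self._m2 = 0.0          # running sum of squared deviations (Welford)

    def update(self, sample: float) -> None:
        """Consume one new sample from the running simulation."""
        self._batch_sum += sample
        self._in_batch += 1
        if self._in_batch == self.batch_size:
            batch_mean = self._batch_sum / self.batch_size
            self._batch_sum = 0.0
            self._in_batch = 0
            # Welford's update applied to the sequence of batch means.
            self.n_batches += 1
            delta = batch_mean - self._mean
            self._mean += delta / self.n_batches
            self._m2 += delta * (batch_mean - self._mean)

    @property
    def mean(self) -> float:
        """Time average over all completed batches."""
        return self._mean

    @property
    def standard_error(self) -> float:
        """Standard error of the time average from the spread of batch means."""
        if self.n_batches < 2:
            raise ValueError("at least two completed batches are required")
        batch_variance = self._m2 / (self.n_batches - 1)
        return math.sqrt(batch_variance / self.n_batches)


# Tiny deterministic example: batch means are 1.5, 3.5, 5.5 and 7.5.
estimator = StreamingBatchMeans(batch_size=2)
for value in [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]:
    estimator.update(value)
print(estimator.mean, estimator.standard_error)
```

In an in-situ setting, `update` would be called from within the time-stepping loop, and the standard error could be monitored to decide when the averages are sufficiently converged.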

We will continue our work within EXCELLERAT combining the presented tools and making use of them during the final simulation of our use case. As a first step, we will enable in-situ analysis for non-conforming meshes produced by AMR runs, together with a UQ analysis of the time averages.

References

- N. Offermans, A. Peplinski, O. Marin, P. Schlatter, Adaptive mesh refinement for steady flows in NEK5000. Comp. Fluids, 197, 104352, 2020.

- S. Rezaeiravesh, R. Vinuesa, P. Schlatter, UQit: A Python package for uncertainty quantification (UQ) in computational fluid dynamics (CFD), Journal of Open Source Software 6 (60), 2871, 2021.

- J. Vincent, et al.: Strong scaling of OpenACC enabled NEK5000 on several GPU based HPC systems. In proc. International Conference on High Performance Computing in Asia-Pacific Region (HPC Asia 2022), Virtual Event, Japan, 2022. doi.org/10.1145/3492805.3492818.